AI SEO tools help you plan, write, improve, and update web content with data from search results and your own performance. They combine SEO research with AI writing and automation so a team can publish more pages with fewer manual steps. In 2026, these tools often act like a workflow system: they do research, generate drafts, suggest optimizations, and track what ranks.

Content optimization means making a page match what searchers want and what search engines can understand. In practice, you optimize content so it targets the right query, answers the intent fast, covers the topic fully, loads quickly, and earns clicks. That work ties directly to revenue because better rankings and higher click through rates usually increase qualified traffic, leads, and sales.

An AI SEO tool uses machine learning or large language models to speed up tasks that teams used to handle manually in spreadsheets and editors. It typically connects three inputs (keyword and SERP data, your site data, and your brand rules), then outputs a draft or a list of clear actions.

Optimized content reduces mismatch in three places: between the query and your page, between the page and Google’s understanding of it, and between the visitor and the next step you want them to take.

Examples of practical optimization include tightening the title to match the primary intent, adding missing subtopics found in top ranking pages, improving internal links to the page, and refreshing facts and screenshots when rankings drop. Some platforms, including Balzac, focus on doing these steps continuously so publishing stays consistent without relying on writers or agencies.

After you define what AI SEO tools are and what content optimization changes on a page, the next question is practical: what do these tools actually do from the first keyword idea to a live URL? Most platforms follow a similar pipeline, even if they package it with different dashboards.

AI SEO tools start by collecting signals about demand and competition. They usually pull keyword ideas, group them by meaning, and estimate difficulty using third party providers or built in datasets. In practice, the tool outputs a short list of targets, each tied to a search intent such as informational, commercial, or transactional. Many teams use Ahrefs and Semrush for this stage because they provide large keyword and backlink datasets.

A good brief answers one thing: what must this page include to satisfy the searcher and match the ranking pattern? Tools generate briefs from top ranking pages by extracting headings, common subtopics, product attributes, and questions. The brief often includes suggested title tags, H2s, internal links to add, and entities to mention (people, brands, specs, locations).

The tool then writes a draft based on the brief, your brand voice, and any constraints you set. The best systems let you lock claims behind sources, avoid risky topics, and keep consistent formatting. In autonomous agents like Balzac, drafting typically runs on a schedule so you do not depend on writers to keep output steady.

Optimization usually means an on page score plus concrete edits. Expect recommendations for:

Internal linking features suggest relevant pages and anchor text based on similarity and site structure. The goal is simple: help Google discover pages and help users move to the next step. Some tools also generate breadcrumb suggestions and hub page plans.

Publishing automation formats the article, adds images or alt text if configured, and posts to a CMS such as WordPress. Agents can also set categories, tags, and author bylines, then schedule publication.

After indexing, tools watch rankings, clicks, and queries in Google Search Console. Google recommends using Search Console to monitor performance and fix issues (see Google Search Console documentation). Strong workflows feed that data back into the brief so the tool updates sections, expands answers, and refreshes internal links as the SERP shifts.

The “best” AI SEO tool in 2026 is the one that produces pages that rank, convert, and stay accurate, while fitting your workflow and risk tolerance. Many platforms can draft text, but buyers should evaluate tools on whether they improve outcomes you can measure, with controls your team can trust.

Accuracy means the tool keeps facts correct and avoids unsupported claims, especially in YMYL topics like health, finance, and legal. Look for features such as: citations, controllable browsing or source inputs, fact check prompts, and clear handling of dates, prices, and specs. If a tool cannot show where claims came from, you will spend time cleaning errors.

Control means you can shape what the AI writes and what it refuses to write. Validate that you can lock brand voice, reading level, audience, preferred terminology, and legal rules. Also check if you can set intent and page type (product, comparison, how to) so the draft matches the query instead of producing generic content.

Automation means fewer manual handoffs from keyword to published page. A strong tool should cover planning, briefing, drafting, on page optimization, internal links, and refreshes. For teams that want consistent output without hiring writers, autonomous agents like Balzac focus on generating and publishing on a schedule, plus updating existing pages as rankings change.

Integrations determine whether the tool fits into how you already work. Common requirements include Google Search Console, Google Analytics, WordPress, Webflow, Shopify, and data sources like Ahrefs or Semrush. Ask what is native, what needs Zapier, and what needs custom API work.

Security means you can control access and protect site credentials. Check for SSO, role based permissions, audit logs, and safe handling of CMS tokens. If you operate in regulated environments, also ask about data retention and whether the vendor trains models on your content.

Measurable lift means you can connect content actions to SEO results. Require reporting that ties pages to metrics such as impressions, clicks, average position, and conversions from analytics. At minimum, the tool should pull performance data from Google Search Console and show before and after changes per URL.

Most AI SEO platforms share a common promise: they help a page match the SERP faster and more consistently. In practice, the features that move rankings tend to be the ones that reduce guesswork in topic selection, page structure, and internal linking.

Keyword clustering groups queries with the same intent so you can target them with one page instead of creating thin duplicates. Good clustering uses SERP overlap, meaning it checks whether Google ranks similar URLs for two queries. This prevents cannibalization, and it makes your editorial calendar cleaner.
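As a rough illustration of SERP overlap clustering, the sketch below groups queries whose top results share at least a minimum number of URLs. The function names, the `min_shared` threshold of 3, and the sample URLs are all assumptions made for this example, not the method of any specific tool.

```python
from typing import Dict, List, Set


def serp_overlap(urls_a: Set[str], urls_b: Set[str]) -> int:
    """Count the URLs that rank for both queries."""
    return len(urls_a & urls_b)


def cluster_by_serp_overlap(
    serps: Dict[str, Set[str]], min_shared: int = 3
) -> List[List[str]]:
    """Greedy clustering: a query joins the first cluster whose seed
    query shares at least `min_shared` ranking URLs with it."""
    clusters: List[List[str]] = []
    for query, urls in serps.items():
        for cluster in clusters:
            seed = cluster[0]  # the first query in a cluster acts as its seed
            if serp_overlap(urls, serps[seed]) >= min_shared:
                cluster.append(query)
                break
        else:
            # No existing cluster matched, so this query starts a new one.
            clusters.append([query])
    return clusters


# Hypothetical top results per query (placeholder URLs).
serps = {
    "ai seo tools": {"a.com/1", "b.com/1", "c.com/1", "d.com/1"},
    "best ai seo software": {"a.com/1", "b.com/1", "c.com/1", "e.com/1"},
    "what is content optimization": {"f.com/1", "g.com/1", "h.com/1", "a.com/2"},
}
clusters = cluster_by_serp_overlap(serps)
print(clusters)
```

With this sample data, the first two queries share three URLs and land in one cluster, so one page can target both. The third query has no overlap and gets its own page.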

Intent matching means the page format fits what wins on the SERP. If the top results are list posts, a product page often struggles. If the SERP rewards comparisons, a generic guide often stalls. Strong tools convert intent into a brief that includes required sections, entities, and questions to answer.

Look for briefs that pull patterns from ranking pages and also allow rules, such as forbidden claims, required citations, and brand terminology.

SERP analysis should reveal the ranking pattern, not just show competing URLs. The most useful outputs include common headings, repeated entities (brands, standards, locations), media types (tables, videos), and People Also Ask style questions. Some teams validate this data with tools like Semrush or Ahrefs.

An on page score only helps if it produces specific edits. Useful recommendations include title rewrites, missing H2 topics, thin section detection, schema suggestions, and query coverage gaps. Avoid tools that push keyword stuffing or rigid keyword counts.

Internal links help Google crawl, understand topical relationships, and pass internal authority. The best systems suggest source pages, target pages, and anchor text that reflects the destination intent. Advanced tools also flag orphan pages and suggest hub structures. Autonomous agents like Balzac can apply internal link recommendations as part of scheduled updates, so older pages keep improving without manual sprints.
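Orphan page detection can be sketched with a simple link map. The example below assumes you already have a crawl result in the form "page -> set of pages it links to"; the function name, the `home` parameter, and the sample paths are hypothetical.

```python
from typing import Dict, List, Set


def find_orphan_pages(links: Dict[str, Set[str]], home: str) -> List[str]:
    """Return pages that receive no internal link from any other page.
    The home page is excluded because it is the crawl entry point."""
    linked_to: Set[str] = set()
    for source, targets in links.items():
        # Ignore self-links: they do not help discovery.
        linked_to.update(t for t in targets if t != source)
    return sorted(p for p in links if p not in linked_to and p != home)


# Hypothetical site structure.
links = {
    "/": {"/blog", "/pricing"},
    "/blog": {"/blog/ai-seo-tools", "/"},
    "/pricing": {"/"},
    "/blog/ai-seo-tools": {"/blog"},
    "/blog/old-guide": {"/blog"},  # links out, but nothing links in
}
orphans = find_orphan_pages(links, home="/")
print(orphans)
```

Here `/blog/old-guide` is flagged because no other page links to it, which makes it a natural candidate for a new link from a hub page.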

Autonomous content creation and publishing automation means software plans, writes, optimizes, and publishes pages with minimal human input, then keeps those pages fresh using performance signals. In practice, you reduce manual handoffs and keep output consistent, which matters more than raw writing speed.

Most autonomous workflows run as a loop, not a one time project. A typical system covers:

Good automation respects your CMS rules and reduces cleanup. Look for controls that set category, tags, slug, canonical, author, and schema output before publish. Also require draft first publishing, so your team can review inside the same interface they already use.
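To make draft first publishing concrete, here is a minimal sketch of the JSON body a tool might send to the WordPress REST API posts endpoint (`/wp-json/wp/v2/posts`). The helper name and sample values are assumptions. The sketch only builds the payload and does not send a request. Canonical and schema settings are left out because they typically come from an SEO plugin rather than core post fields.

```python
import json
from typing import Any, Dict, List


def build_draft_payload(
    title: str,
    html: str,
    slug: str,
    category_ids: List[int],
    tag_ids: List[int],
    author_id: int,
) -> Dict[str, Any]:
    """Build a post body that always lands as a draft for human review."""
    return {
        "title": title,
        "content": html,
        "slug": slug,
        "status": "draft",           # never "publish" in a draft first setup
        "categories": category_ids,  # WordPress expects term IDs, not names
        "tags": tag_ids,
        "author": author_id,         # WordPress user ID
    }


# Hypothetical values for illustration.
payload = build_draft_payload(
    title="AI SEO Tools: A Buyer's Guide",
    html="<p>Draft body goes here.</p>",
    slug="ai-seo-tools-buyers-guide",
    category_ids=[12],
    tag_ids=[34, 56],
    author_id=3,
)
body = json.dumps(payload)
print(body)
```

Hard coding `"status": "draft"` in one helper is a simple guardrail: an editor still has to approve the post inside the CMS before it goes live.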

Human review should focus on high impact risk, not commas. Keep humans in the loop for:

You can usually let low risk informational pages publish with lighter checks, while requiring approval for YMYL and sales pages.
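A tiered review rule like this can be expressed in a few lines. The sketch below is one possible encoding; the `Page` fields, the page type labels, and the tier names are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    page_type: str  # e.g. "informational", "comparison", "sales"
    ymyl: bool      # True for health, finance, legal, and similar topics


def review_tier(page: Page) -> str:
    """Route YMYL and sales pages to mandatory approval;
    everything else gets a lighter check."""
    if page.ymyl or page.page_type == "sales":
        return "approval_required"
    return "light_check"


# Hypothetical pages.
pages = [
    Page("/blog/what-is-keyword-clustering", "informational", ymyl=False),
    Page("/pricing", "sales", ymyl=False),
    Page("/blog/tax-deductions-guide", "informational", ymyl=True),
]
tiers = {p.url: review_tier(p) for p in pages}
print(tiers)
```

Keeping the rule in one function makes it easy to tighten later, for example by adding comparison pages to the approval tier.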

Update automation works best when it watches real performance data. Many platforms monitor Google Search Console queries, impressions, and average position, then propose edits tied to specific URLs and lost terms. Google describes these reports in its Search Console Performance report documentation.
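One way to detect lost terms is to compare two reporting periods for the same URL. The sketch below assumes you have already exported per-query impressions and average position for each period. The `QueryStats` type, the thresholds, and the sample numbers are illustrative assumptions.

```python
from typing import Dict, List, NamedTuple


class QueryStats(NamedTuple):
    impressions: int
    position: float  # average position; lower is better


def lost_terms(
    previous: Dict[str, QueryStats],
    current: Dict[str, QueryStats],
    max_position_drop: float = 3.0,
    min_impression_ratio: float = 0.7,
) -> List[str]:
    """Flag queries that disappeared, slipped by at least
    `max_position_drop` positions, or kept less than
    `min_impression_ratio` of their earlier impressions."""
    flagged: List[str] = []
    for query, before in previous.items():
        after = current.get(query)
        if after is None:
            flagged.append(query)  # the page no longer appears for this query
            continue
        position_dropped = after.position - before.position >= max_position_drop
        impressions_fell = after.impressions < before.impressions * min_impression_ratio
        if position_dropped or impressions_fell:
            flagged.append(query)
    return flagged


# Hypothetical data for one URL.
previous = {
    "ai seo tools": QueryStats(1000, 4.2),
    "seo automation": QueryStats(400, 8.0),
    "content brief": QueryStats(200, 6.0),
}
current = {
    "ai seo tools": QueryStats(950, 4.5),
    "seo automation": QueryStats(390, 12.1),
}
flagged = lost_terms(previous, current)
print(flagged)
```

In this sample, "seo automation" is flagged for a position drop and "content brief" because it vanished, while "ai seo tools" stays within both thresholds.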

Assisted tools stop at drafts and recommendations; your team still publishes. Autonomous agents also schedule, publish, and refresh. For example, Balzac focuses on continuous generation plus CMS publishing automation, which fits teams that want predictable output without hiring writers or running an agency workflow.

Competitor and SERP gap analysis finds what already ranks, then shows what your site misses. AI SEO tools do this by comparing the topics, sections, and entities on top ranking pages against your existing content, then turning the gaps into a prioritized plan.
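The comparison described above can be sketched as a counting problem: tally which topics appear on top ranking pages, then keep the ones your page lacks. The function name, the `min_pages` cutoff, and the topic labels below are assumptions. Real tools extract topics from headings and entities, which this example skips.

```python
from collections import Counter
from typing import List, Set, Tuple


def content_gaps(
    competitor_topics: List[Set[str]],
    own_topics: Set[str],
    min_pages: int = 2,
) -> List[Tuple[str, int]]:
    """Return topics covered by at least `min_pages` competitor pages
    but missing from your page, most common first."""
    counts = Counter(t for topics in competitor_topics for t in topics)
    gaps = [
        (topic, n)
        for topic, n in counts.items()
        if n >= min_pages and topic not in own_topics
    ]
    # Sort by frequency (descending), then alphabetically for stable output.
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


# Hypothetical topics extracted from three top ranking pages.
competitor_topics = [
    {"pricing", "integrations", "keyword clustering"},
    {"pricing", "keyword clustering", "faq"},
    {"pricing", "integrations"},
]
own_topics = {"pricing"}
gaps = content_gaps(competitor_topics, own_topics)
print(gaps)
```

The `min_pages` cutoff filters out one-off sections, so the plan only includes gaps that several ranking pages share.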

The SERP acts like a live rubric. AI tools extract patterns from the first page results and summarize what Google seems to reward for a query. Most platforms look at:

Not every gap matters. The gaps that move rankings usually fall into three buckets:

A topic map turns competitor coverage into an internal linking plan. Tools group keywords by intent, then assign them to content types so you avoid cannibalization. A practical topic map usually includes:

The best output is a short list of edits per URL, tied to ranking evidence. Common actions include rewriting the title to match winning phrasing, adding missing H2 sections pulled from top results, expanding definitions to answer questions faster, and adding internal links from relevant high traffic pages.

Tools like Ahrefs and Semrush help confirm competitors and query sets, while autonomous agents like Balzac can turn those findings into scheduled updates and new articles so your site closes gaps without manual sprints.

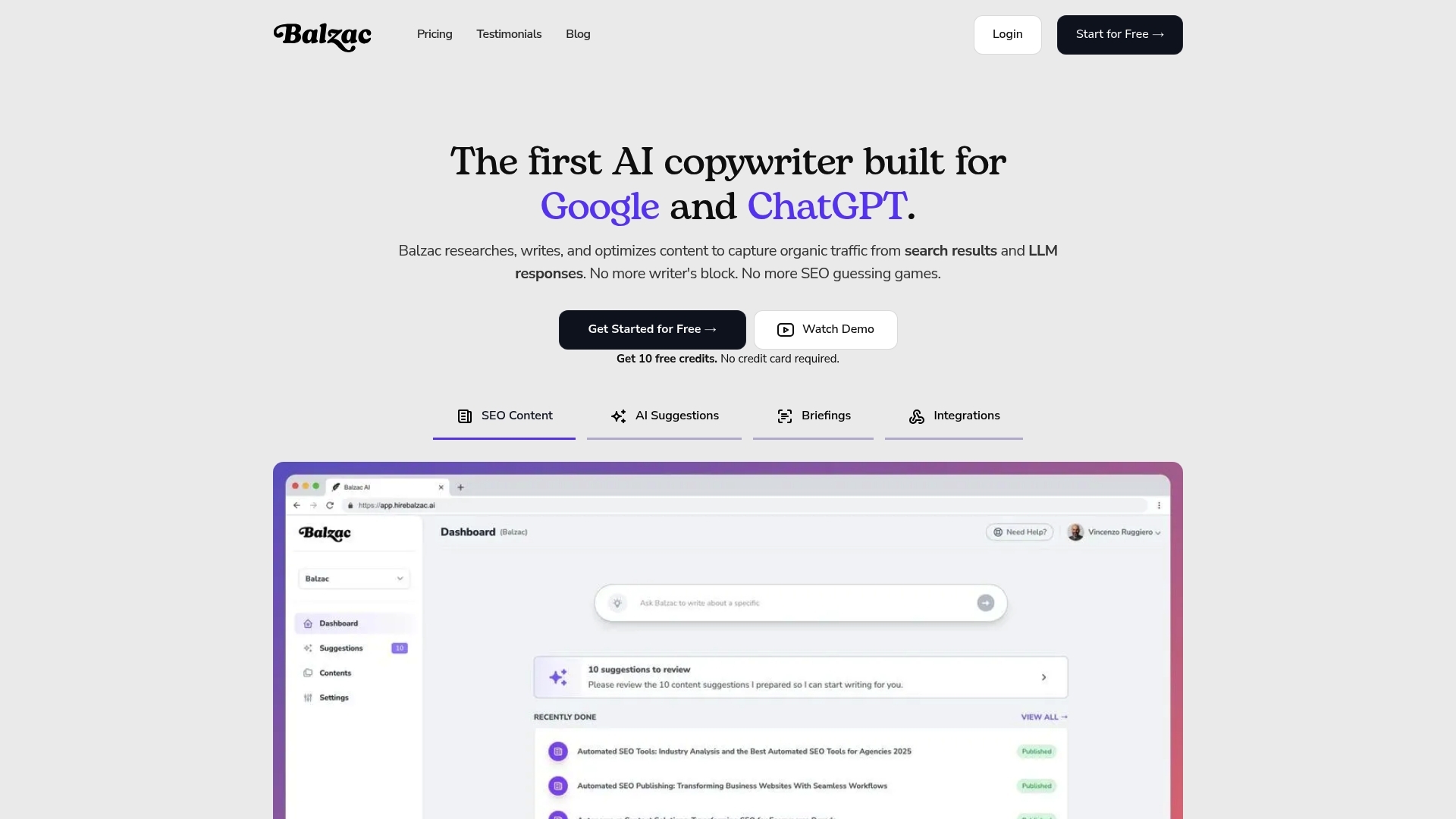

Balzac acts like an autonomous SEO agent: it takes you from a topic idea to a published page, then keeps improving that page using performance data. The practical benefit is consistency: you can publish on a schedule without managing writers, briefs, editors, and manual CMS work.

Balzac automates the repeatable parts of SEO content operations, the work that usually slows down teams after the first few posts. It focuses on the same workflow you saw in the previous section, but runs it as a continuous loop.

Autonomous does not have to mean unreviewed. Most teams run Balzac in a tiered approval model: draft first for higher risk pages, and lighter checks for low risk informational posts.

Publishing automation matters because formatting debt kills velocity. Balzac aims to publish in a CMS ready format so you do not spend hours fixing headings, links, and slugs. For many sites, the cleanest setup is to publish to draft status, then approve inside the CMS.

SEO content decays when the SERP changes. Balzac can monitor page performance and trigger updates when impressions fall, rankings drop, or new competing pages add missing sections. Many teams pair this with Google Search Console data, which Google describes in its Search Console Performance report documentation.

The output you want is measurable: more indexed pages, more queries per URL, and fewer content gaps across your topic map, without adding writers or agency overhead.

The right AI SEO tool is the one that matches your output target, your risk tolerance, and your existing stack. After you identify SERP gaps and opportunities, selection becomes simpler if you decide who will use the tool, how much you want to automate, and what “success” means (rankings, leads, revenue, or all three).

Team size determines how much control and review you need. A solo operator often wants one tool that covers research, writing, and publishing. A larger team usually needs approvals, permissions, and audit trails.

Different goals require different features. If you try to optimize for everything, you usually overbuy complexity.

Volume changes the economics. If you publish a few pages per month, assisted tools can work. If you publish dozens or hundreds, you need automation that does not create bottlenecks.

Use cost per published, indexed page as your baseline. Include tool fees plus human review time. Compare that number to your current writing costs, and to the value of a lead or sale. Avoid plans that charge heavily for actions you do not need, such as extra seats if you publish autonomously.
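The baseline is simple arithmetic: total spend divided by the pages that actually got indexed. The sketch below uses made-up numbers (a 500 monthly tool fee, 10 review hours at 60 per hour, and 40 indexed pages) purely to show the calculation.

```python
def cost_per_indexed_page(
    tool_fee: float,
    review_hours: float,
    hourly_rate: float,
    indexed_pages: int,
) -> float:
    """Total monthly cost (tool plus human review) per indexed page."""
    if indexed_pages <= 0:
        raise ValueError("indexed_pages must be positive")
    total_cost = tool_fee + review_hours * hourly_rate
    return total_cost / indexed_pages


# Hypothetical month: (500 + 10 * 60) / 40
cost = cost_per_indexed_page(
    tool_fee=500, review_hours=10, hourly_rate=60, indexed_pages=40
)
print(cost)  # 27.5
```

Dividing by indexed pages rather than published pages keeps the metric honest: pages that never reach the index add cost without adding search visibility.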

Integrations decide whether automation actually saves time. Confirm native connections for your must haves, and ask what requires Zapier or custom API work.

A two to four week pilot should answer one question: does the tool publish pages your team accepts with minimal rework, and do early signals improve (indexing, impressions, ranking movement)? If you want near zero writer dependency, test an autonomous agent like Balzac for scheduled generation, CMS posting, and refreshes tied to performance changes.

Buyers usually ask the same questions before they trust an AI SEO platform with production work. The answers below focus on accuracy, compliance risk, total cost, and measurable lift, since those decide whether automation helps or hurts.

AI SEO tools can write plausible text that is still wrong, especially on dates, product specs, pricing, medical claims, and legal statements. Accuracy depends less on the model and more on controls: grounded sources, required citations, and clear “unknown” behavior.

Google focuses on content quality, not whether a human or AI wrote it. If your pages help users, stay accurate, and show expertise where needed, they can rank. If pages exist to manipulate rankings and add no value, they tend to fail.

Google explains this stance in its Search Central guidance on AI generated content.

Review should target risk and conversion, not grammar clean up. Most teams keep a tighter gate for money pages and regulated topics, and a lighter gate for low stakes informational content.

Pricing varies based on seats, page volume, and automation depth. Assisted writing tools usually price per seat and usage, while autonomous systems add value through planning, CMS publishing, and refresh cycles. When you compare options, include the cost of editors, SEO oversight, and developer time for integrations.

ROI is measurable per URL when you connect publishing and updates to Search Console and analytics. Track results in a fixed window, then compare against a baseline.
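A per-URL comparison against a baseline might look like the sketch below. It assumes you have click totals per URL for two windows of equal length, for example exported from Search Console. The function name and the sample figures are hypothetical.

```python
from typing import Dict, Optional


def click_lift(
    baseline: Dict[str, int], window: Dict[str, int]
) -> Dict[str, Optional[float]]:
    """Percent change in clicks per URL versus the baseline window.
    URLs without baseline clicks return None, because a percentage
    change is undefined for them."""
    lift: Dict[str, Optional[float]] = {}
    for url, clicks in window.items():
        base = baseline.get(url, 0)
        if base == 0:
            lift[url] = None  # new page or no prior clicks
        else:
            lift[url] = (clicks - base) * 100 / base
    return lift


# Hypothetical click totals for two 28 day windows.
baseline = {"/blog/ai-seo-tools": 200, "/blog/content-briefs": 50}
window = {
    "/blog/ai-seo-tools": 260,
    "/blog/content-briefs": 40,
    "/blog/new-post": 15,
}
lift = click_lift(baseline, window)
print(lift)
```

With these numbers the first URL gains 30 percent, the second loses 20 percent, and the new post has no baseline to compare against, so it is best tracked on absolute clicks until it has history.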

Use Google Search Console performance reports as the source of truth for search impact; Google describes them in its Performance report documentation. Tools like Balzac help here by tying generation, publishing, and refreshes to the same URLs you measure.

You now have the selection criteria and a pilot approach. What matters next is execution: choose a narrow scope, publish quickly, then measure results at the URL level so you can decide to scale or stop.

Prioritize outcomes, not features. The tool should help you ship pages that match intent, index fast, and improve search visibility with minimal rework.

A short, controlled test beats a long rollout. Pick a small content set where you can see signal quickly, without risking your highest value pages.

Measure leading indicators first, then revenue indicators when you have enough traffic. Use Google Search Console as your baseline reporting layer (Google explains the core reports in its Search Console Performance report documentation).

Scale by tightening guardrails, not by adding manual steps. Create a tiered review model (light review for low risk informational posts, strict review for YMYL and sales pages), then expand volume only after the first batch shows indexing plus query growth. If your goal is consistent output with minimal writer dependency, test an autonomous agent such as Balzac for scheduled generation, CMS posting, and performance based refreshes.